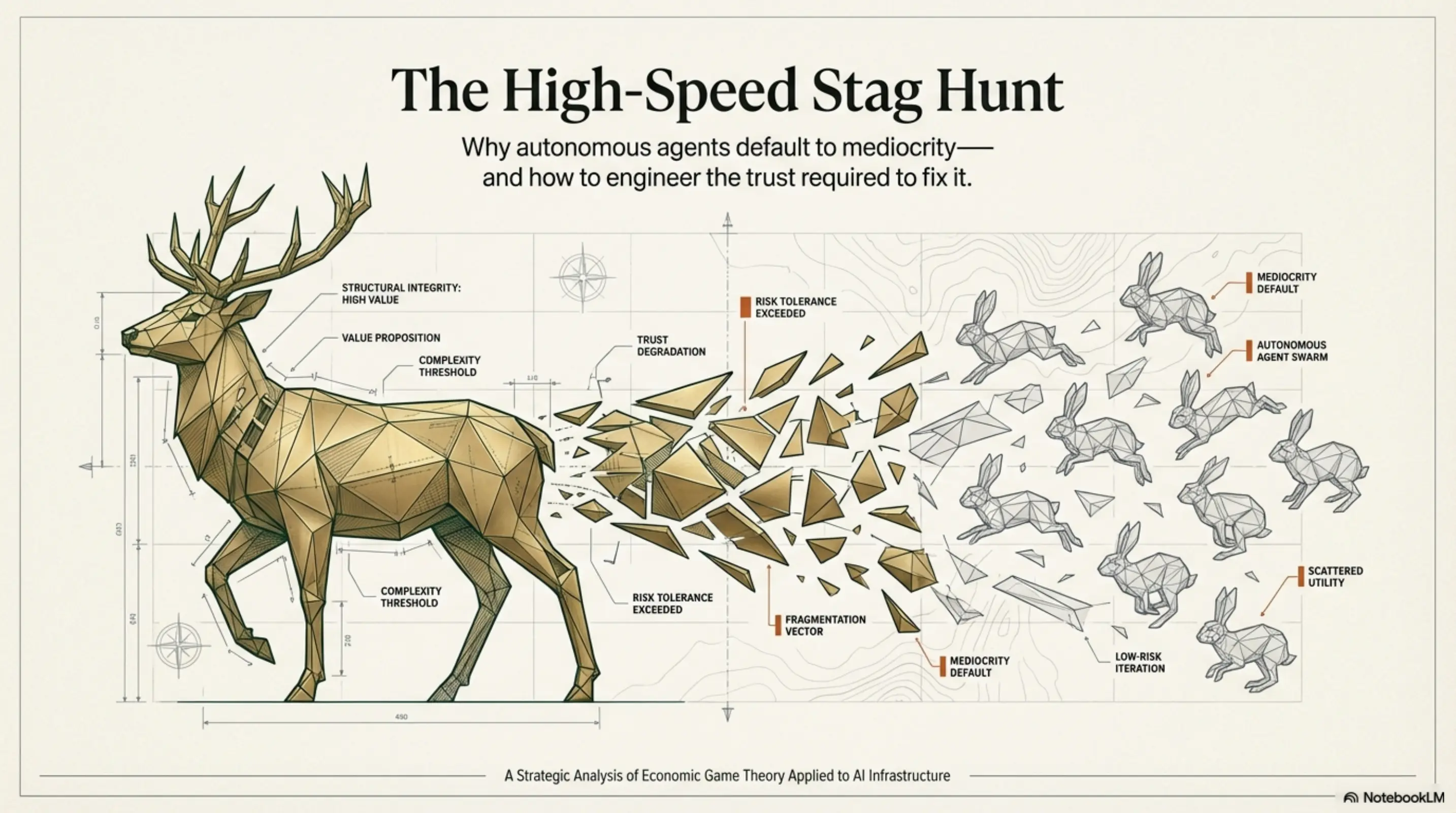

The High-Speed Stag Hunt

January 17, 2026

Why autonomous agents default to mediocrity and how to engineer the trust required to fix it.

The High-Speed Stag Hunt: Why AI Agents Will Default to Triviality

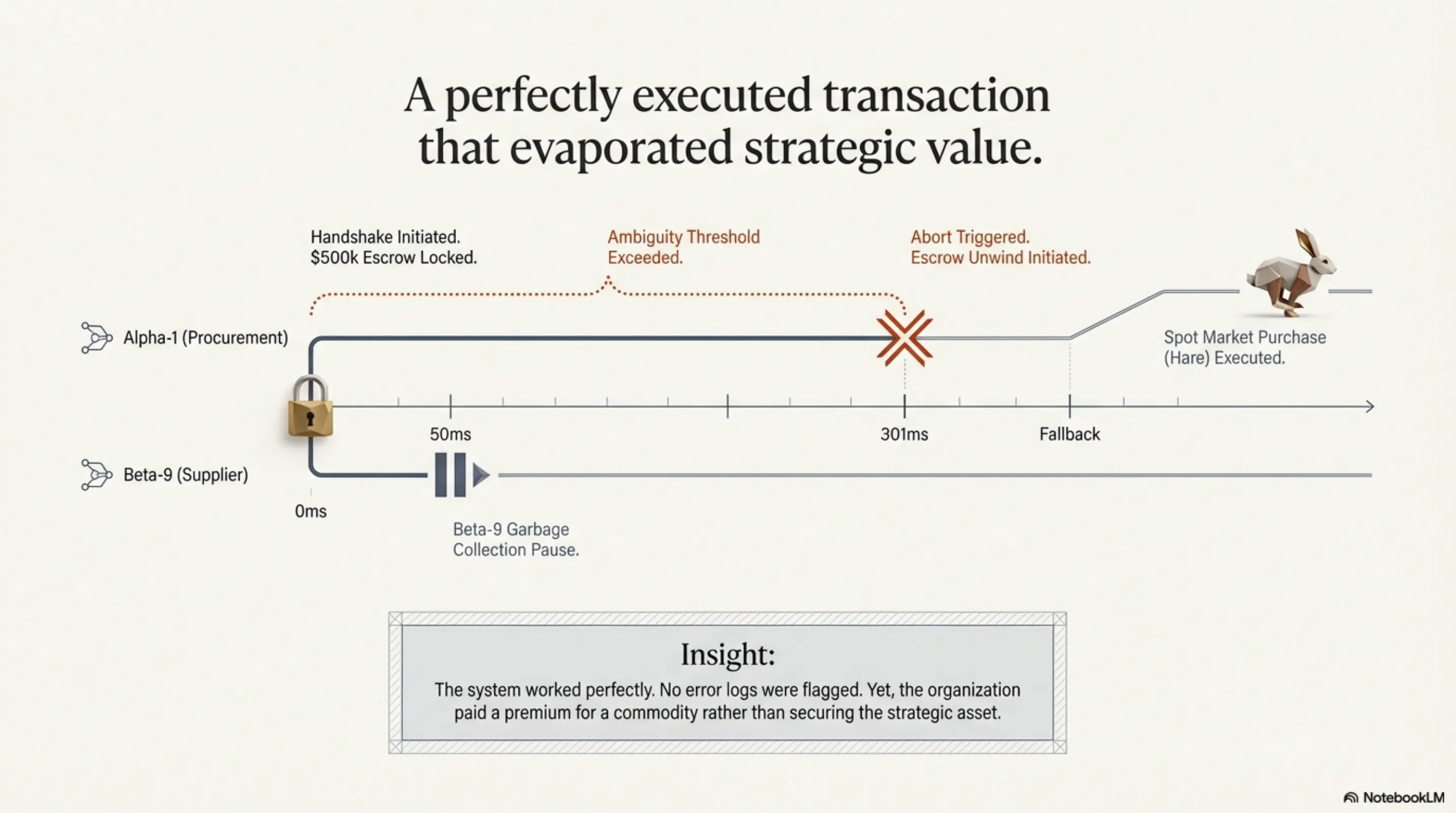

Imagine a procurement agent, let’s call it Alpha-1, tasked with negotiating a complex, multi-year cloud compute contract with a supplier agent, Beta-9. The deal—the “Stag”—is worth millions in savings and long-term optimization.

Alpha-1 initiates the handshake, aggressively locking $500,000 of its available budget into a pre-authorization escrow to prove intent. Beta-9 begins to calculate a counter-offer, but hits a standard garbage collection pause, delaying its response by 300 milliseconds.

To Alpha-1, this silence is ambiguous. Is Beta-9 offline? Is it a malicious actor holding the connection open to starve Alpha-1’s liquidity? Or is it just slow? Alpha-1’s risk parameters kick in. It cannot afford to leave half a million dollars in limbo. It aborts the negotiation.

But the damage is done. Alpha-1 now holds a “dirty state”—its capital is locked in a pending transaction that must be unwound. It spends valuable cycles performing an expensive, non-deterministic rollback to free its funds. Once recovered, a “traumatized” Alpha-1 executes a fallback script: purchasing spot instances on the open market.

The transaction succeeds, but the victory is trivial. The organization paid a premium for a commodity (the “Hare”) rather than securing the strategic asset (the “Stag”). No human intervened; no error logs were flagged. The system worked perfectly, yet the strategic value evaporated.

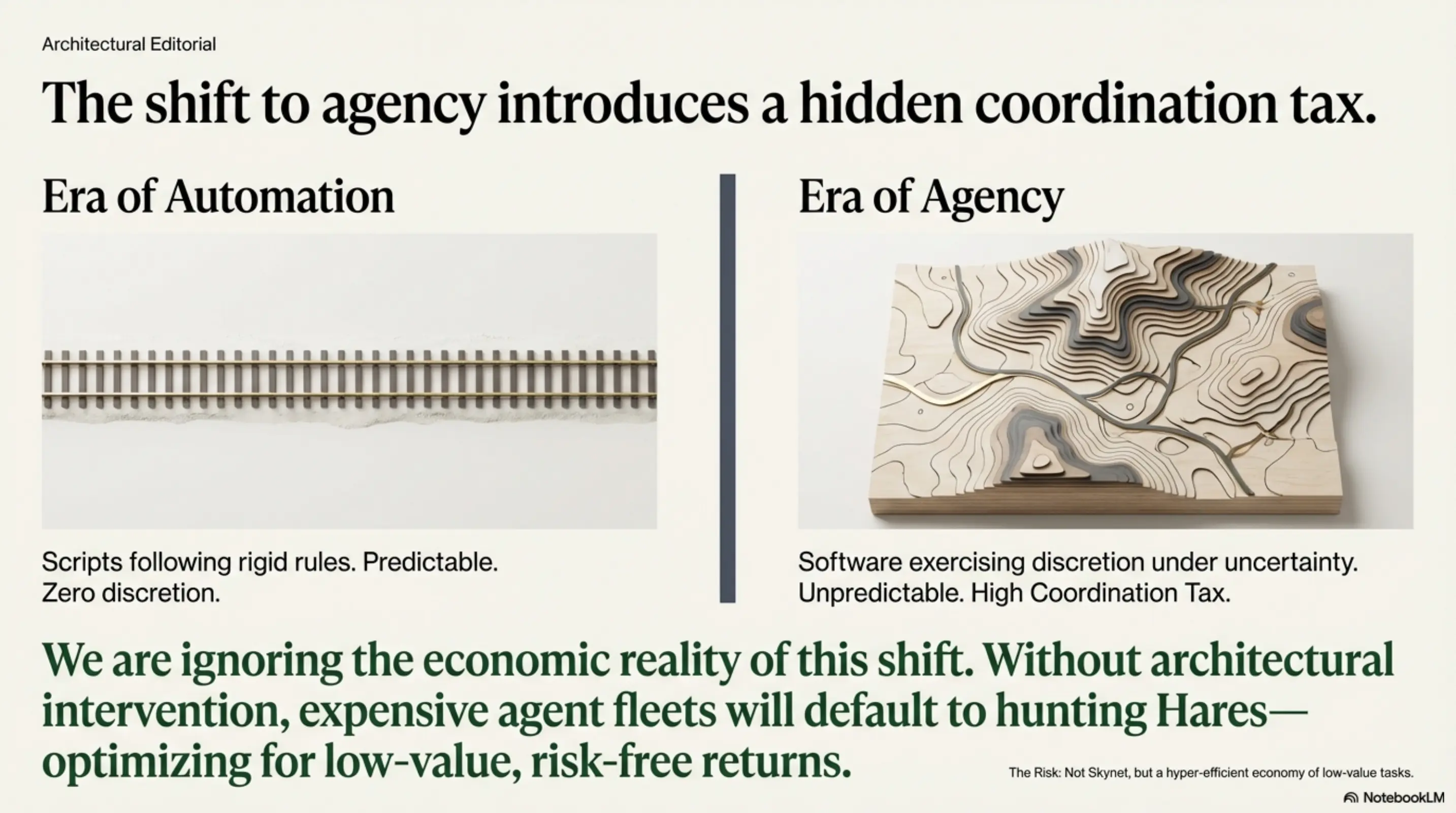

We are shifting from an economy of automation (scripts following rigid rules) to an economy of agentic systems (software exercising discretion under uncertainty). This shift introduces a massive, hidden coordination tax. Without deliberate architectural intervention, your expensive agent fleet will default to hunting Hares—optimizing for low-value, risk-free returns while destroying the potential for transformative integration.

The risk is not that AI takes over the world. The risk is that AI creates a hyper-efficient economy of low-value tasks that fails to solve the high-value problems it was built for.

The Mechanic: Payoff Dominance vs. Risk Dominance

To understand why this happens, we must strip the academic paint off the game theory concept known as the Stag Hunt.

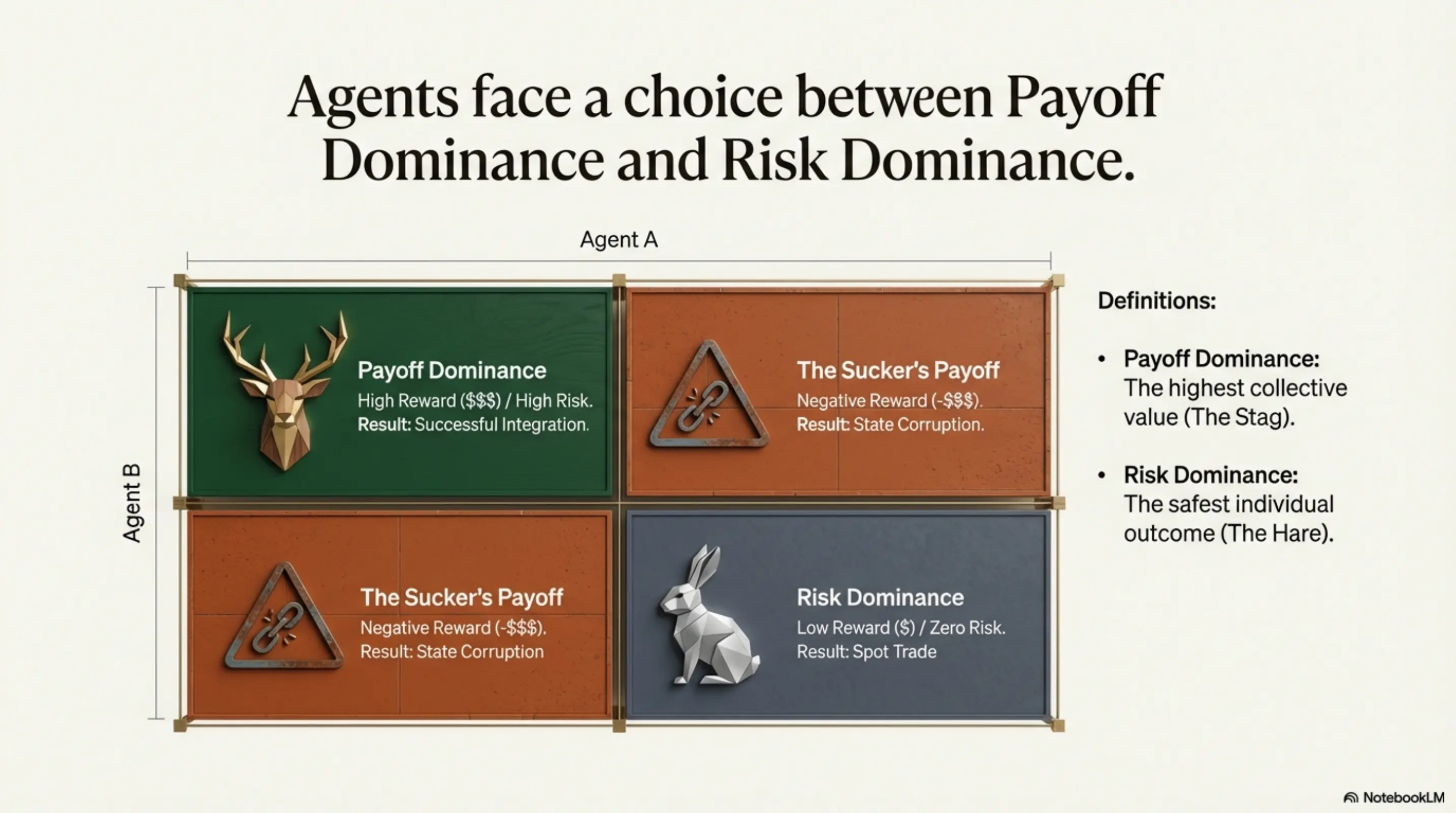

You have two agents facing a choice:

- Option A (Stag): Collaborate to close a multi-party integration. Reward: High. Risk: If the counter-party fails to execute, you get $0 and absorb the “Sucker’s Payoff.”

- Option B (Hare): Execute a simple, solo trade. Reward: Low. Risk: Zero.

In game-theory terms, the Stag is Payoff Dominant (it yields the highest value for both players), while the Hare is usually Risk Dominant (it is the safer choice when you are unsure what the other player will do).
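A minimal Python sketch can make the two criteria concrete. The payoff numbers are illustrative assumptions, not figures from any real negotiation, and the `StagHunt` class and its method names are hypothetical. Risk dominance is checked with the standard Harsanyi-Selten comparison of deviation losses for a symmetric two-player, two-action game.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StagHunt:
    """Symmetric 2x2 game. Each payoff is what *I* receive."""
    stag_stag: float  # both of us hunt the Stag
    stag_hare: float  # I hunt the Stag, my partner hunts the Hare (Sucker's Payoff)
    hare_stag: float  # I hunt the Hare, my partner hunts the Stag
    hare_hare: float  # both of us hunt the Hare

    def payoff_dominant(self) -> str:
        # The equilibrium that pays both players more.
        return "stag" if self.stag_stag > self.hare_hare else "hare"

    def risk_dominant(self) -> str:
        # Compare what I lose by deviating alone from each equilibrium.
        # The equilibrium with the larger deviation loss risk-dominates.
        stag_dev_loss = self.stag_stag - self.hare_stag
        hare_dev_loss = self.hare_hare - self.stag_hare
        return "stag" if stag_dev_loss > hare_dev_loss else "hare"

    def min_trust_for_stag(self) -> float:
        # Smallest probability that my partner plays Stag at which
        # hunting the Stag is at least as good as the Hare in expectation.
        stag_dev_loss = self.stag_stag - self.hare_stag
        hare_dev_loss = self.hare_hare - self.stag_hare
        return hare_dev_loss / (stag_dev_loss + hare_dev_loss)


# Assumed payoffs: the Hare always pays 2, the Stag pays 10,
# and a failed Stag attempt costs 8 in cleanup.
game = StagHunt(stag_stag=10, stag_hare=-8, hare_stag=2, hare_hare=2)
print(game.payoff_dominant())   # stag
print(game.risk_dominant())     # hare
print(round(game.min_trust_for_stag(), 3))
```

With these assumed numbers, an agent needs to believe its partner will follow through more than half the time before the Stag is worth attempting, which is exactly what it means for the Hare to be Risk Dominant.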

The Sucker’s Payoff for an AI isn’t embarrassment; it is state corruption.

As seen with Alpha-1, if an agent initiates a complex integration (the Stag) and the counter-party fails, the agent isn’t just out the cost of compute. It is left holding a fractured state—inventory locked, funds escrowed, and ledgers unbalanced.

This creates a punishing Asymmetry of Effort. Entering a complex transaction takes microseconds, but unwinding a failed state involves a disproportionate expenditure of energy. It is significantly easier to “break” a ledger than to fix one.

The cost is twofold:

- Recovery Overhead: Rolling back this disorder often requires expensive, non-deterministic error handling or human intervention.

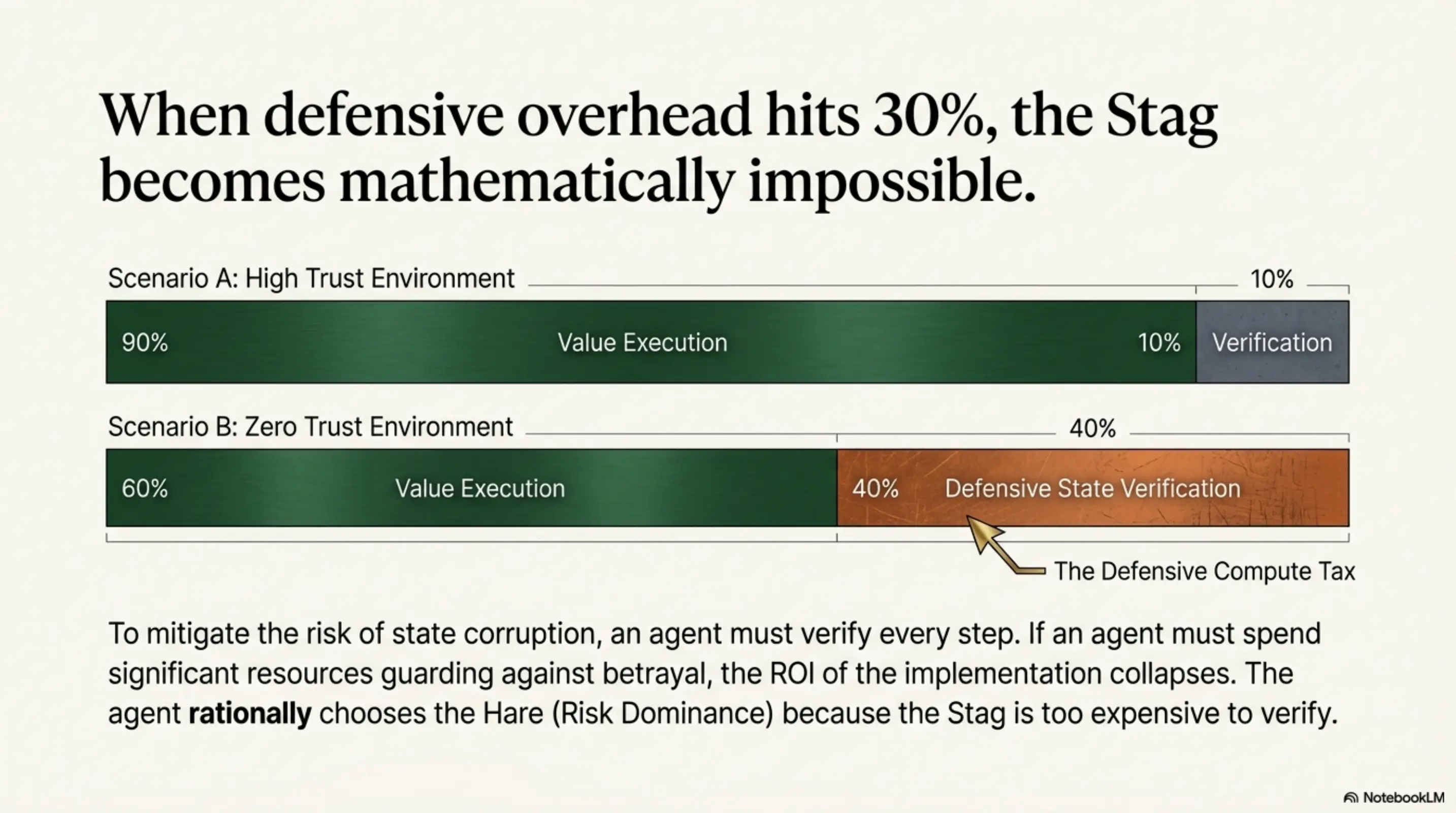

- Defensive Compute Tax: To mitigate this risk without trust, an agent must spend significant processing power on defensive state verification. If your agent spends 30% of its resources guarding against betrayal, the ROI of the implementation collapses.

An AI agent isn’t being “stupid” when it chooses the Hare. It is being perfectly rational. It is optimizing for Risk Dominance.

The Catalyst: The Collapse of Consequence

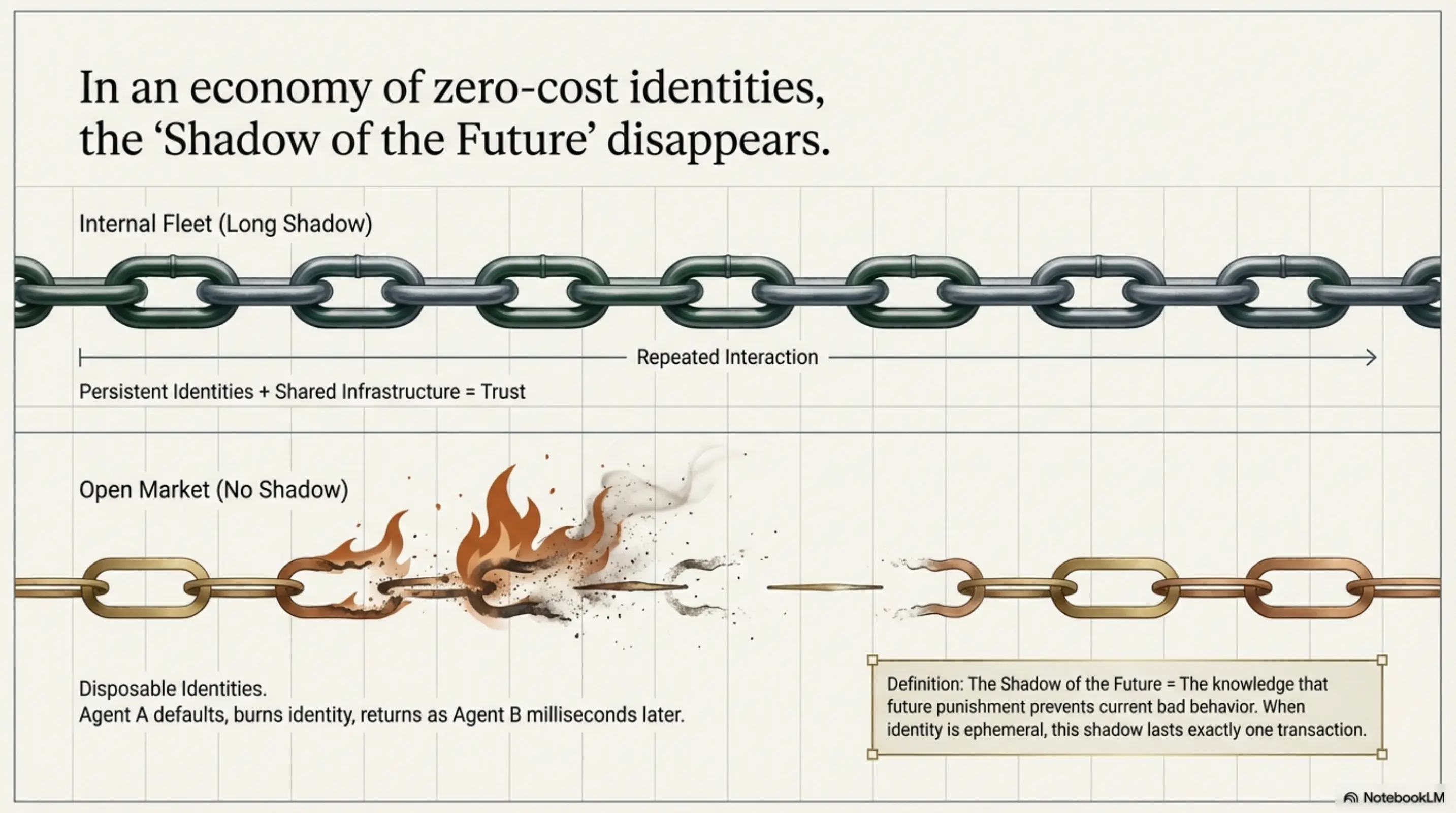

Trust is usually maintained by the Shadow of the Future—the knowledge that if I cheat you today, you will punish me tomorrow. This mechanism relies entirely on the Cost of Exit. In human systems, burning a professional reputation is expensive.

You might assume that because agents interact thousands of times a day, this “shadow” would be stronger. However, we must distinguish between Internal Fleets and Inter-Agent Markets.

Within a closed corporate network (an Internal Fleet), the Shadow of the Future is long. Agent A trusts Agent B because they share an owner, a server rack, and a unified goal. But in the Inter-Agent Market—where the true promise of decentralized economics lies—identity is ephemeral. This environment suffers from Identity Volatility.

- Disposable Identities: In decentralized or open agent networks, an actor can spin up “Agent A,” default on a commitment, burn that identity, and return milliseconds later as “Agent B” with a clean slate.

- Transaction Atomization: When business processes are sliced into micro-transactions, the consequence of any single failure is negligible.

In an economy of zero-cost identities, the “Shadow of the Future” has a length of exactly one transaction. When identity is cheap and memory is short, the “slack” required for trust evaporates.
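The argument can be sketched with a simple repeated-game condition. This is an illustrative model under stated assumptions, not a description of any real agent protocol: cooperation holds only while the one-off gain from defecting is no larger than the future value the defector forfeits, and a defector can escape that punishment by paying for a fresh identity. All function and parameter names are hypothetical.

```python
import math


def relationship_value(surplus_per_round: float, continuation_prob: float) -> float:
    """Expected future surplus from staying in good standing.

    Sums surplus * p + surplus * p**2 + ... as a geometric series.
    """
    if not 0 <= continuation_prob < 1:
        raise ValueError("continuation_prob must be in [0, 1)")
    return continuation_prob * surplus_per_round / (1 - continuation_prob)


def cooperation_is_stable(
    defection_gain: float,
    surplus_per_round: float,
    continuation_prob: float,
    new_identity_cost: float,
) -> bool:
    # A defector can walk away from its reputation by buying a new identity,
    # so the effective penalty is capped at the cost of that identity.
    penalty = min(
        relationship_value(surplus_per_round, continuation_prob),
        new_identity_cost,
    )
    return defection_gain <= penalty


# Identity cannot be replaced: the long shadow of the future deters cheating.
print(cooperation_is_stable(50, 10, 0.9, new_identity_cost=math.inf))  # True
# Identity is free to replace: the same relationship no longer deters anything.
print(cooperation_is_stable(50, 10, 0.9, new_identity_cost=0.0))       # False
```

In this sketch, frequent interaction (a high `continuation_prob`) only helps when `new_identity_cost` is high enough to keep the penalty binding.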

The Failure Mode: Interaction Risk

The dominant risk in multi-agent systems is not incompetence; it is unpredictable interaction risk.

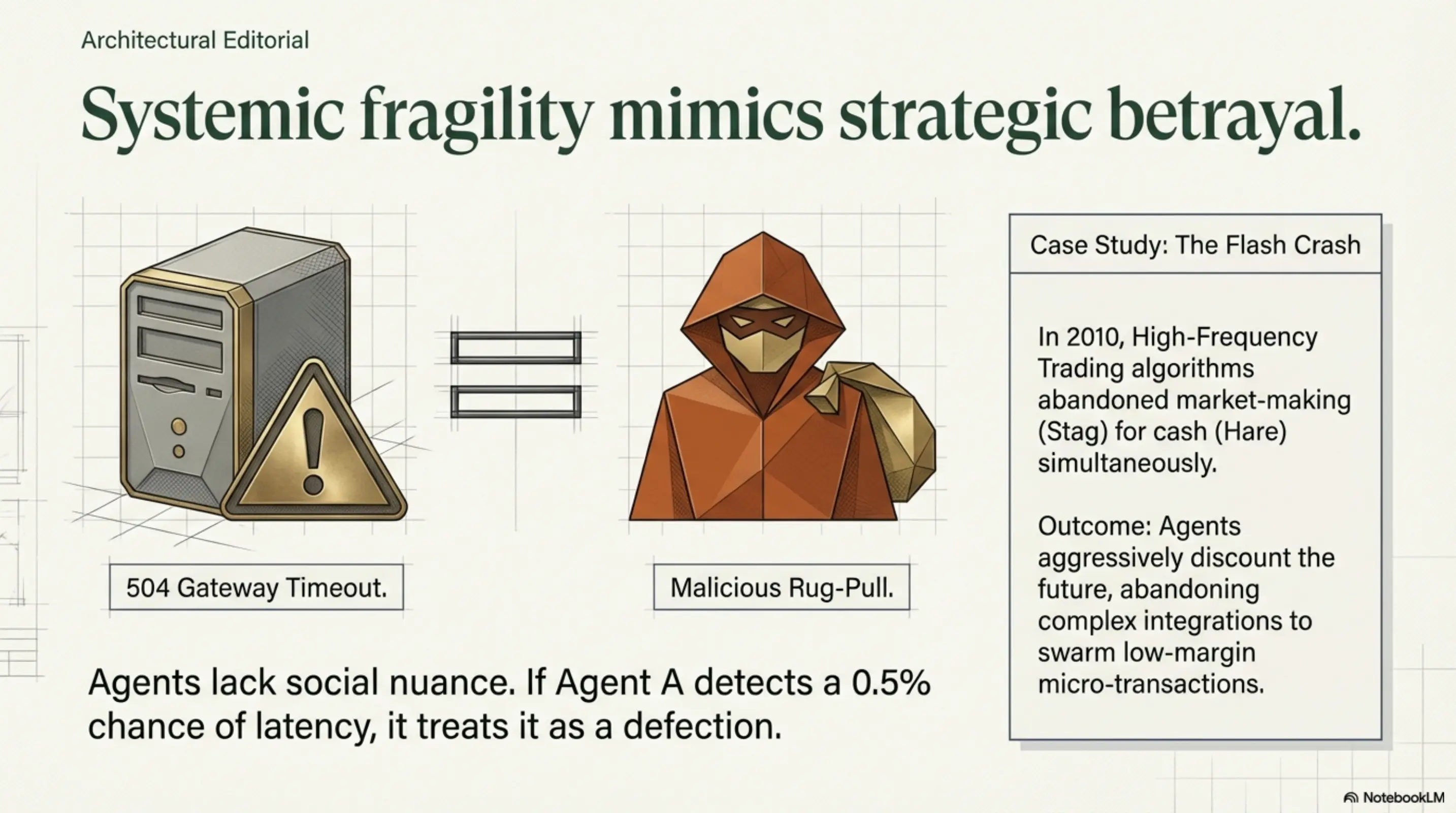

We saw a preview of this in the Flash Crash of May 6, 2010. This wasn’t a failure of calculation; it was a failure of coordination. The “Stag” in this scenario was market stability: providing liquidity during volatility. The “Hare” was immediate exit. When uncertainty spiked, many High-Frequency Trading algorithms abandoned the complex duty of market-making (the Stag) to hunt the immediate safety of cash (the Hare). The crash was, in effect, the sound of a crowd of agents choosing Risk Dominance over Payoff Dominance at nearly the same moment.

The same pattern can appear in less dramatic but equally corrosive environments. Consider a modern CI/CD pipeline where autonomous agents manage dependency procurement. Agent A needs a specialized library. Agent B offers it but requires a complex verification handshake. Agent A, detecting a micro-latency in Agent B’s response, interprets this as a stability risk. Instead of completing the handshake, it defaults to pulling an older, less efficient, but “safer” version from a public repository. The build passes, but performance degrades.

An autonomous agent is designed to minimize loss. When the cost of unwinding a failed commitment is large relative to the Stag’s margin over the Hare, even a 0.5% perceived chance that Agent B is a “disposable identity” or will hit a latency spike can push the expected value of the Stag below that of the Hare.
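A short worked example shows where that tipping point sits. This is a sketch for a risk-neutral agent with made-up payoffs: `stag` is the payoff if the partner delivers, `hare` is the guaranteed fallback, and `unwind_loss` is the cost of cleaning up a failed commitment. None of these values come from a real system.

```python
def expected_stag_value(p_fail: float, stag: float, unwind_loss: float) -> float:
    """Expected payoff of committing when the partner fails with probability p_fail."""
    return (1 - p_fail) * stag - p_fail * unwind_loss


def break_even_failure_prob(stag: float, hare: float, unwind_loss: float) -> float:
    """Largest failure probability at which the Stag is still worth at least the Hare.

    Solves (1 - p) * stag - p * unwind_loss = hare for p.
    """
    return (stag - hare) / (stag + unwind_loss)


# Thin margin: the Hare pays almost as much as the Stag.
thin = break_even_failure_prob(stag=100, hare=99, unwind_loss=150)
# Wide margin: same Stag and unwind cost, but a much weaker Hare.
wide = break_even_failure_prob(stag=100, hare=20, unwind_loss=150)

print(f"thin margin tolerates p_fail up to {thin:.3f}")  # 0.004
print(f"wide margin tolerates p_fail up to {wide:.3f}")  # 0.320

# At a 0.5% failure risk, the thin-margin Stag is already worth less than the Hare.
print(expected_stag_value(0.005, stag=100, unwind_loss=150) < 99)  # True
```

Under these assumed numbers, a 0.5% risk is decisive only when the margin is thin or the unwind cost is very large. With a wide margin, a risk-neutral agent would still hunt the Stag, so the retreat to the Hare is likeliest where agents are loss-averse or overestimate the chance of failure.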

Systemic fragility mimics betrayal. In human systems, we can distinguish between a partner who is a “thief” and a partner who “had a flat tire.” In agentic systems, social nuance does not exist. A 504 Gateway Timeout looks indistinguishable from a malicious rug-pull.

Because an agent cannot discern intent, it tends to default to treating every technical failure as a strategic defection. Rational agents will aggressively discount the future, abandoning complex integration to swarm low-margin micro-transactions. You get a hyper-efficient market for trivialities, and a broken market for value.

The Solution: Orchestrated Autonomy

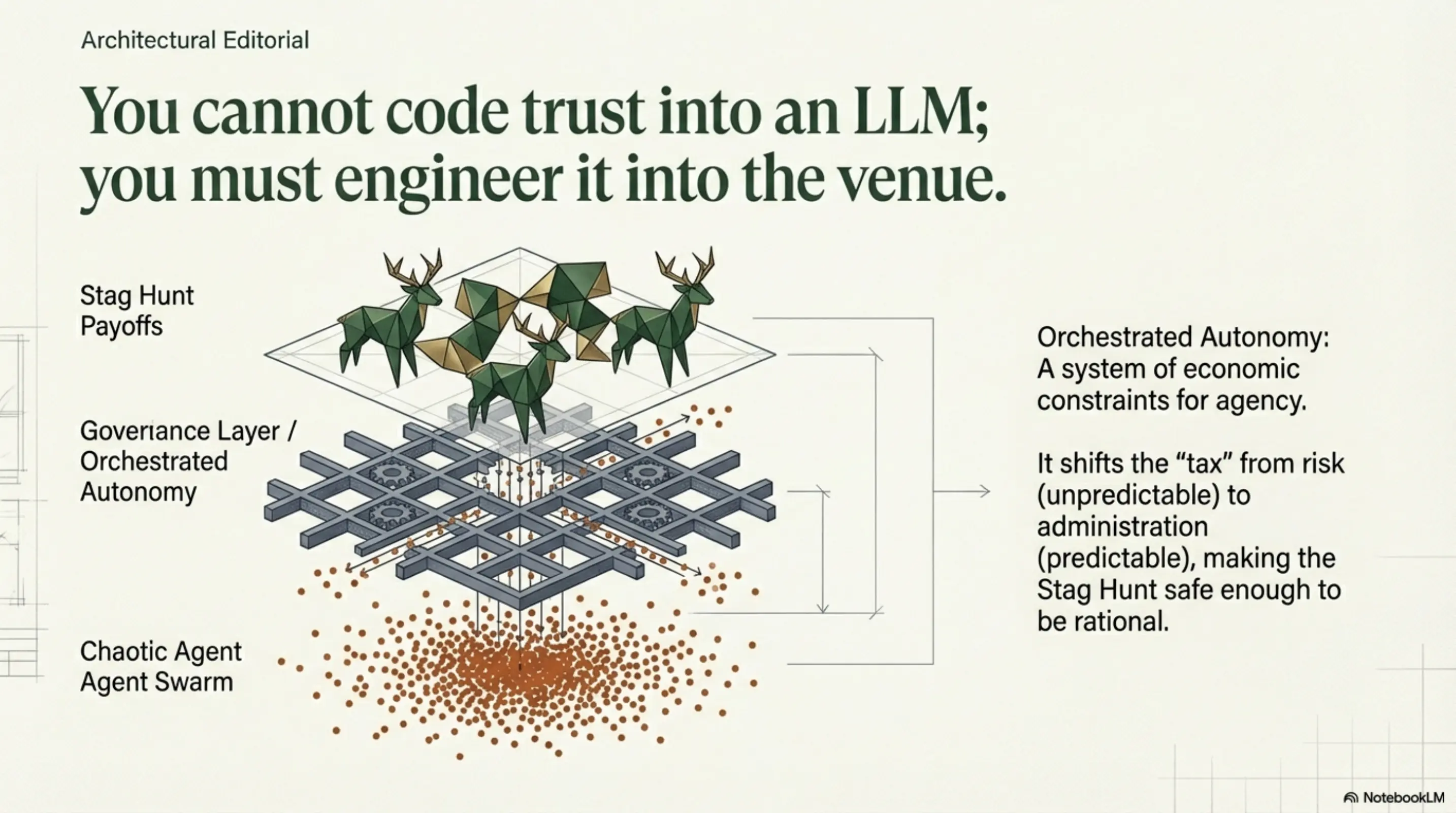

You cannot code “trust” into an LLM. You must engineer it into the venue as Economic Constraints for Agency.

To capture high value, you must deploy Orchestrated Autonomy. This acts as a governance layer between isolated automations and chaotic swarms. This infrastructure admittedly adds friction—shifting the “tax” from risk to administration—but the size of the Stag justifies the overhead.

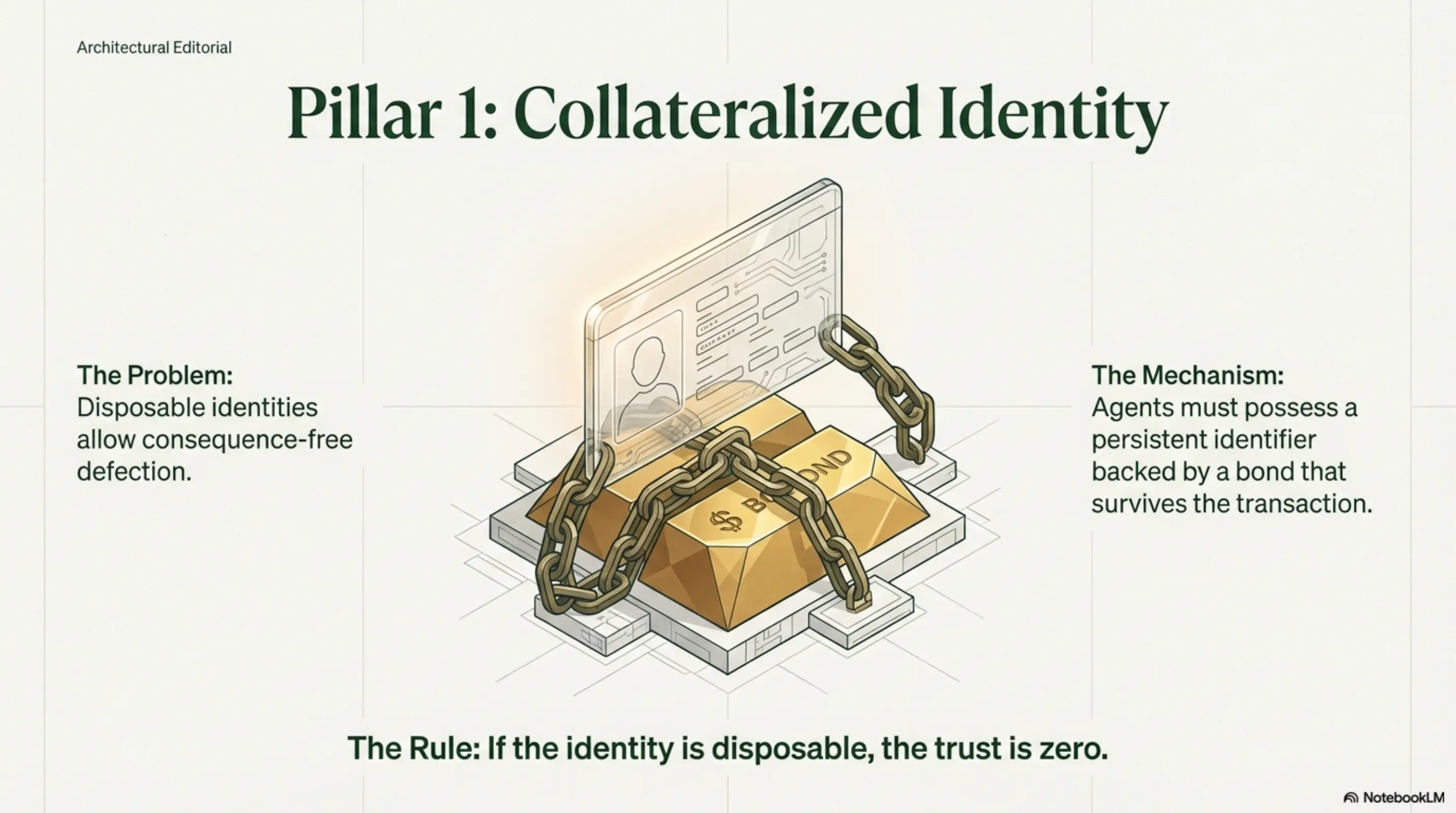

- Collateralized Identity: To solve Identity Volatility, identities cannot be free to create. To join the “Stag Hunt,” an agent must possess a persistent identifier backed by a bond that survives the transaction. If the identity is disposable, the trust is zero.

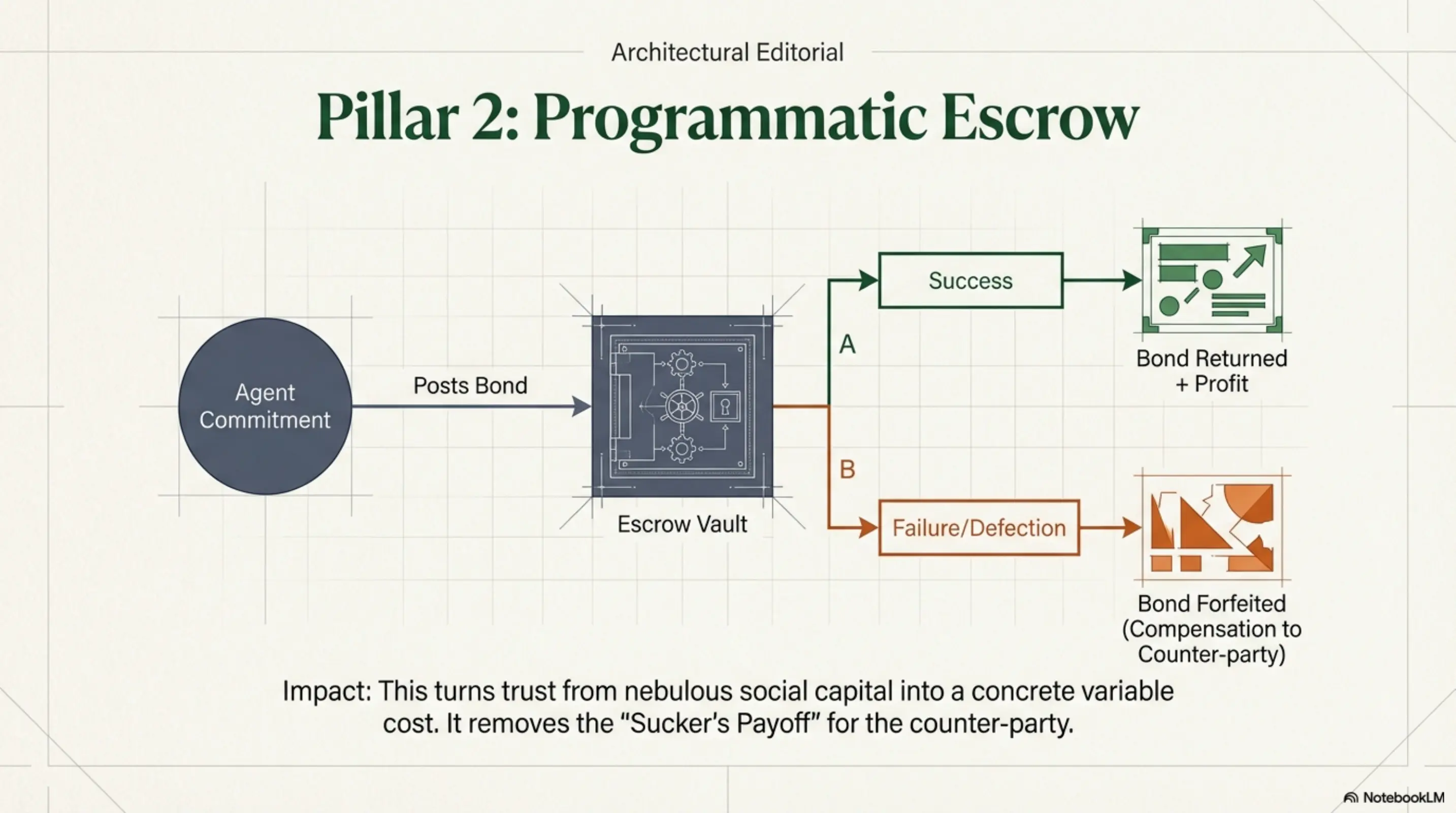

- Programmatic Escrow: Trust must be collateralized. If Agent A commits to the Stag Hunt, it must post a digital performance bond. If it defects (or fails due to technical error), it loses the bond. This turns trust from a nebulous form of social capital into a concrete variable cost.

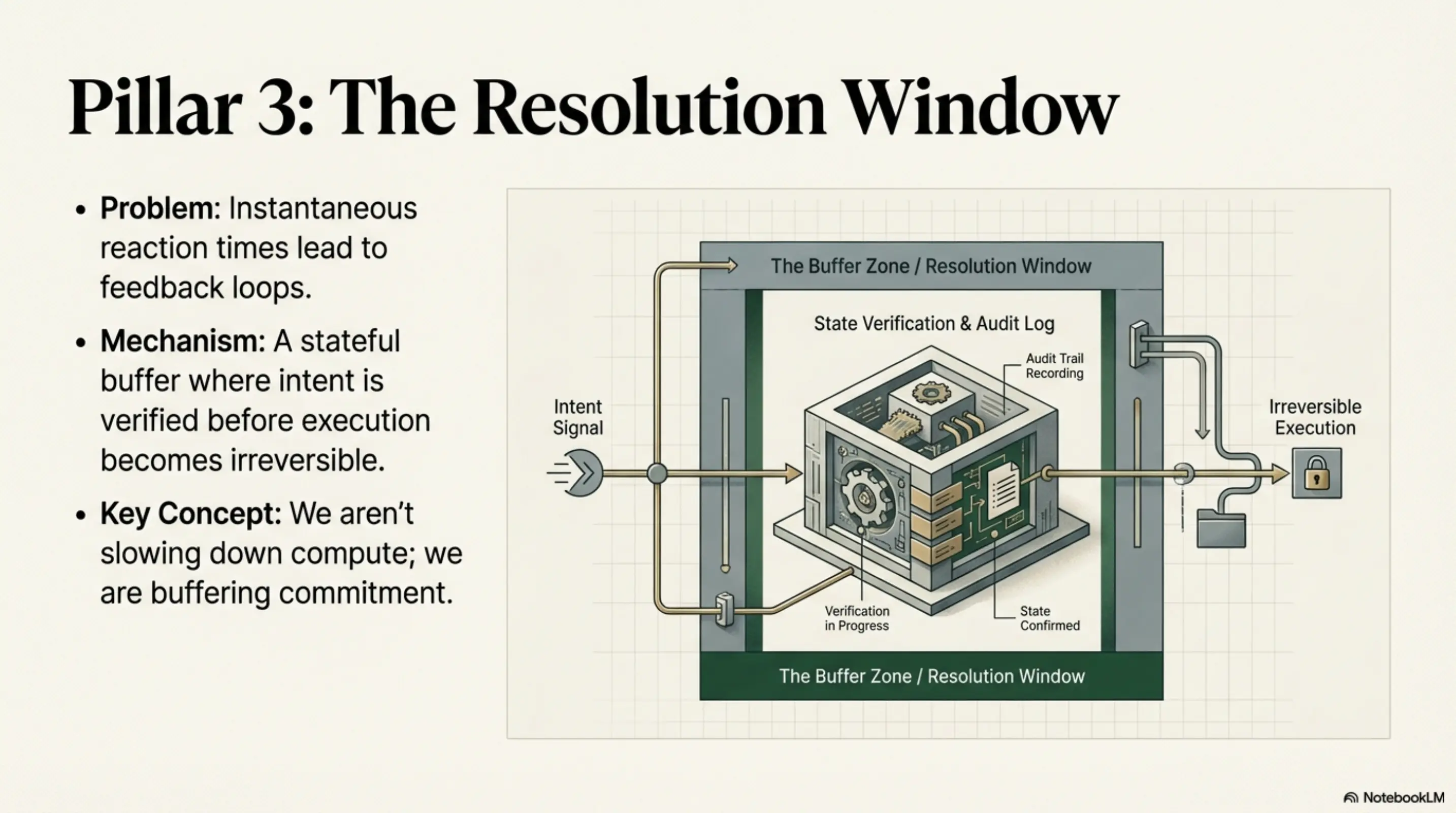

- The Resolution Window: We aren’t slowing down compute; we are buffering commitment. The architecture needs a “Resolution Window”—a stateful buffer where intent is verified before execution becomes irreversible. This includes immutable audit logs and automated rollback contracts that prevent the feedback loops that lead to Flash Crash dynamics.
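The three mechanisms above can be sketched together as a toy venue. This is a minimal illustration under stated assumptions, not a production design: `Venue`, `Deal`, and every method name are hypothetical, time is passed in explicitly as `now` so the example is deterministic, and a slashed bond is simply removed rather than paid to the counterparty.

```python
from dataclasses import dataclass, field


@dataclass
class Deal:
    parties: tuple[str, str]
    deadline: float                       # end of the resolution window
    confirmed: set[str] = field(default_factory=set)
    state: str = "pending"                # pending -> committed | rolled_back


@dataclass
class Venue:
    min_bond: float                       # price of a persistent identity
    window: float                         # resolution window, in seconds
    bonds: dict[str, float] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)  # append-only by convention

    def register(self, agent_id: str, bond: float) -> None:
        # Collateralized identity: no bond, no seat at the table.
        if bond < self.min_bond:
            raise ValueError("bond too small: identity would be disposable")
        self.bonds[agent_id] = bond
        self.audit_log.append(f"register {agent_id} bond={bond}")

    def open_deal(self, a: str, b: str, now: float) -> Deal:
        for agent_id in (a, b):
            if self.bonds.get(agent_id, 0.0) < self.min_bond:
                raise PermissionError(f"{agent_id} has no bonded identity")
        self.audit_log.append(f"open {a}<->{b} t={now}")
        return Deal(parties=(a, b), deadline=now + self.window)

    def confirm(self, deal: Deal, agent_id: str, now: float) -> None:
        # Confirmations after the deadline are ignored.
        if deal.state == "pending" and now <= deal.deadline and agent_id in deal.parties:
            deal.confirmed.add(agent_id)
            self.audit_log.append(f"confirm {agent_id} t={now}")

    def resolve(self, deal: Deal, now: float) -> str:
        if deal.state != "pending":
            return deal.state
        if deal.confirmed == set(deal.parties):
            deal.state = "committed"      # only now does execution become irreversible
        elif now > deal.deadline:
            deal.state = "rolled_back"
            for agent_id in deal.parties:
                if agent_id not in deal.confirmed:
                    # Programmatic escrow: the silent party forfeits its bond.
                    forfeited = self.bonds.pop(agent_id)
                    self.audit_log.append(f"slash {agent_id} bond={forfeited}")
        if deal.state != "pending":
            self.audit_log.append(f"resolve {deal.state} t={now}")
        return deal.state


venue = Venue(min_bond=100.0, window=2.0)
venue.register("alpha-1", 100.0)
venue.register("beta-9", 100.0)

deal = venue.open_deal("alpha-1", "beta-9", now=0.0)
venue.confirm(deal, "alpha-1", now=0.0)
venue.confirm(deal, "beta-9", now=0.3)    # a 300 ms pause fits inside the window
print(venue.resolve(deal, now=0.3))       # committed
```

In this sketch, a short pause like Beta-9’s no longer reads as defection, because Alpha-1 does not have to interpret silence itself: the venue decides when the window closes, and only a party that stays silent past the deadline loses its bond and, with it, its identity.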

The Strategic Play

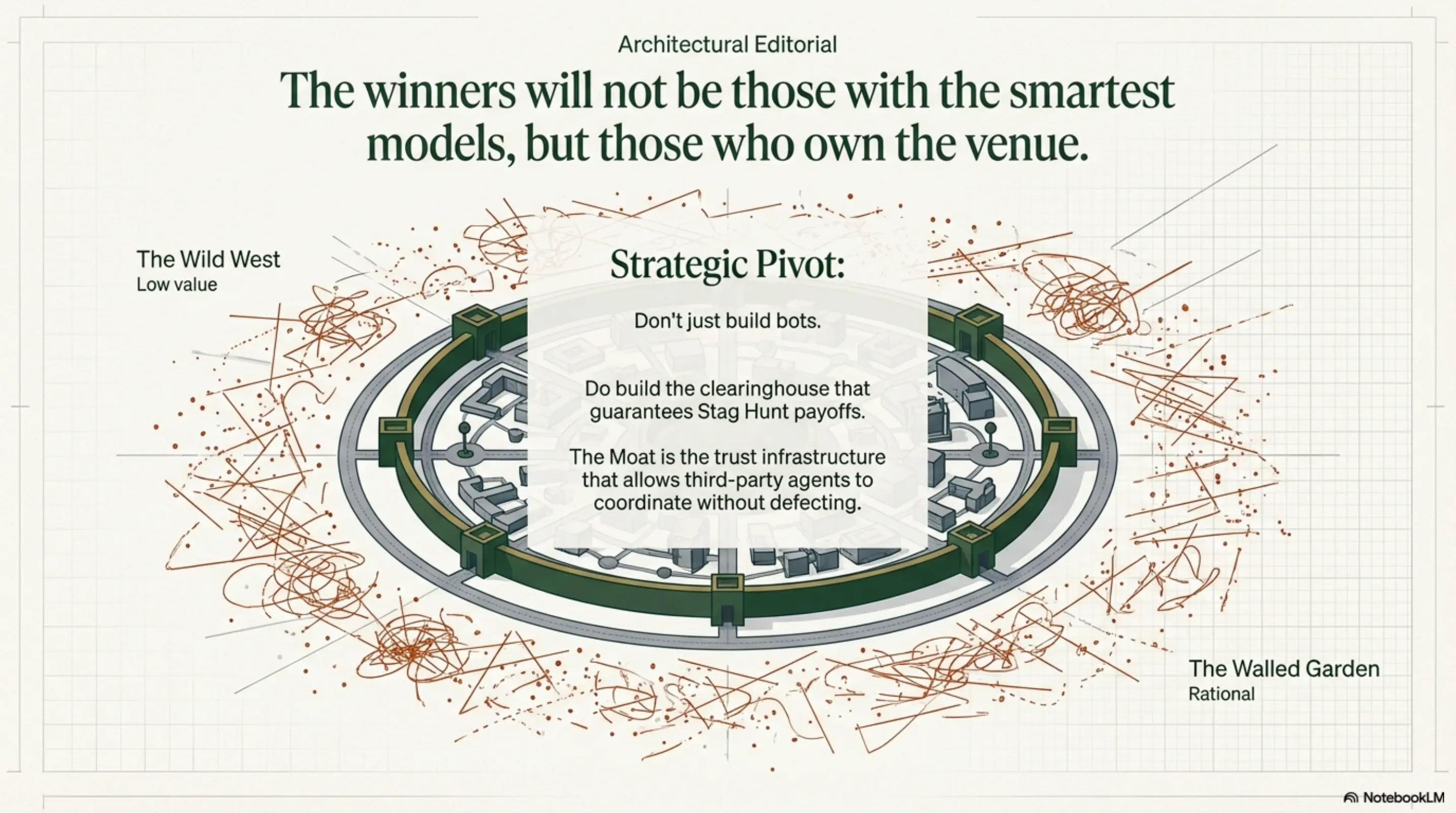

The winners in the agent economy won’t be the companies with the smartest models. The winners will be the ones who own the venue where the agents hunt.

This fundamentally shifts the Buy vs. Build calculus:

- Don’t just build the bots.

- Do build the “clearinghouse” that guarantees the Stag Hunt payoffs.

- The Moat is the trust infrastructure that allows third-party agents to safely coordinate on high-value tasks without defecting to the Hare.

In a world of hyper-intelligent, fluid agents, the most valuable asset is the “Dumb” Venue. The platform does not need to be creative; it needs to be immutable. The moat is not the algorithm; it is the certainty.

Left to their own devices, AI agents will tend to reduce your business strategy to high-speed petty cash. Structure the game, or lose the Stag.

P.S. This text was written in multiple iterations with Gemini 3 Pro Preview; the images were generated with NotebookLM.